CRITICAL//Consensus

"Can what is playing you make it to level-2?"

News and announcements of the library. No books here.

Official Chinese channel: t.me/zlib_china_official

https://z-library.sk

https://en.wikipedia.org/wiki/Z-Library

https://twitter.com/Z_Lib_official

https://mastodon.social/@Z_Lib_official

Last updated 1 year, 7 months ago

Intel slava is a Russian news aggregator that covers conflicts, geopolitics, and urgent news from around the world.

For paid promotions and feedback, contact us at @CEOofBelarus

Last updated 1 year, 1 month ago

💫Welcome to the best book channel of Telegram.

✨Buy ads: https://telega.io/c/BooksHub25

✨Contact admin ➠ @Bookshub_contact_bot

✨ Copyright Disclaimer➠ https://telegra.ph/LEGAL-COPYRIGHT-DISCLAIMER-09-18

Links for 2025-02-12

AI:

-

Large language models (LLMs) can be used to discover interpretable models of human and animal behavior. A method called CogFunSearch adapts FunSearch, a tool that uses LLMs within an evolutionary algorithm. The discovered programs can be interpreted as hypotheses about human and animal cognition, instantiating interpretable symbolic learning and decision-making algorithms. https://www.biorxiv.org/content/10.1101/2025.02.05.636732v1

-

LLMs Can Easily Learn to Reason from Demonstrations: Structure, not content, is what matters https://arxiv.org/abs/2502.07374

-

NatureLM: Deciphering the Language of Nature for Scientific Discovery https://arxiv.org/abs/2502.07527

-

Evolution and The Knightian Blindspot of Machine Learning: the authors propose that ML can benefit from considering the temporal unfolding of an open world, using a diversity-and-filter approach to handle Knightian uncertainty (KU), and incorporating non-stationarity into foundation model pretraining. https://arxiv.org/abs/2501.13075

-

On the Emergence of Thinking in LLMs I: Searching for the Right Intuition https://arxiv.org/abs/2502.06773

-

ReasonFlux: Hierarchical LLM Reasoning via Scaling Thought Templates https://arxiv.org/abs/2502.06772

-

Training Language Models to Reason Efficiently https://arxiv.org/abs/2502.04463

-

“o3 can't multiply 10 digit numbers, but here is the acc of a 14m transformer that teaches itself how to do it, with iterative self-improvement” https://x.com/DimitrisPapail/status/1889755872642970039

-

Scaling Pre-training to One Hundred Billion Data for Vision Language Models https://arxiv.org/abs/2502.07617

-

Can 1B LLM Surpass 405B LLM? Rethinking Compute-Optimal Test-Time Scaling https://arxiv.org/abs/2502.06703

-

DeepScaleR: Surpassing O1-Preview with a 1.5B Model by Scaling RL https://pretty-radio-b75.notion.site/DeepScaleR-Surpassing-O1-Preview-with-a-1-5B-Model-by-Scaling-RL-19681902c1468005bed8ca303013a4e2 (but see this thread: https://x.com/DimitrisPapail/status/1889422843982524558)

-

8GB of high-quality math reasoning data https://huggingface.co/datasets/open-r1/OpenR1-Math-Raw

AI politics:

-

'Possibly by 2026 or 2027 (and almost certainly no later than 2030), the capabilities of AI systems will be best thought of as akin to an entirely new state populated by highly intelligent people appearing on the global stage' https://www.anthropic.com/news/paris-ai-summit

-

Sam Altman says the $500 billion Stargate project will be dwarfed in a few years with $5 trillion AI compute clusters, despite the recent DeepSeek release https://youtu.be/oEdlwfD5vK8?si=UpmTkOCaUxmQYFc8&t=664

-

The Paris AI Anti-Safety Summit https://www.lesswrong.com/posts/qYPHryHTNiJ2y6Fhi/the-paris-ai-anti-safety-summit

-

Why Did Elon Musk Just Offer to Buy Control of OpenAI for $100 Billion? https://www.lesswrong.com/posts/tdb76S4viiTHfFr2u/why-did-elon-musk-just-offer-to-buy-control-of-openai-for

-

Meta Platforms is reportedly in discussions to acquire South Korean AI chip startup FuriosaAI. https://www.koreatimes.co.kr/www/tech/2025/02/129_392093.html

-

OpenAI set to finalize first custom chip design this year https://www.reuters.com/technology/openai-set-finalize-first-custom-chip-design-this-year-2025-02-10/

Science and Technology:

-

Princeton neuroscientists crack the code of how we make decisions https://pni.princeton.edu/news/2025/princeton-neuroscientists-crack-code-how-we-make-decisions

-

Physicists have built a new type of digital-analogue quantum simulator in Google’s laboratory, which can be used to study physical processes with unprecedented precision and flexibility. https://www.psi.ch/en/news/media-releases/unique-quantum-simulator-opens-door-to-new-research

-

Anduril Takes Over $22 Billion Contract to Build Technomancers for U.S. Army https://www.corememory.com/p/anduril-takes-over-22-billion-contract

-

Einstein Was Right – Euclid Just Captured Space-Time Warping in a Perfect Cosmic Ring https://www.esa.int/Science_Exploration/Space_Science/Euclid/Euclid_discovers_a_stunning_Einstein_ring

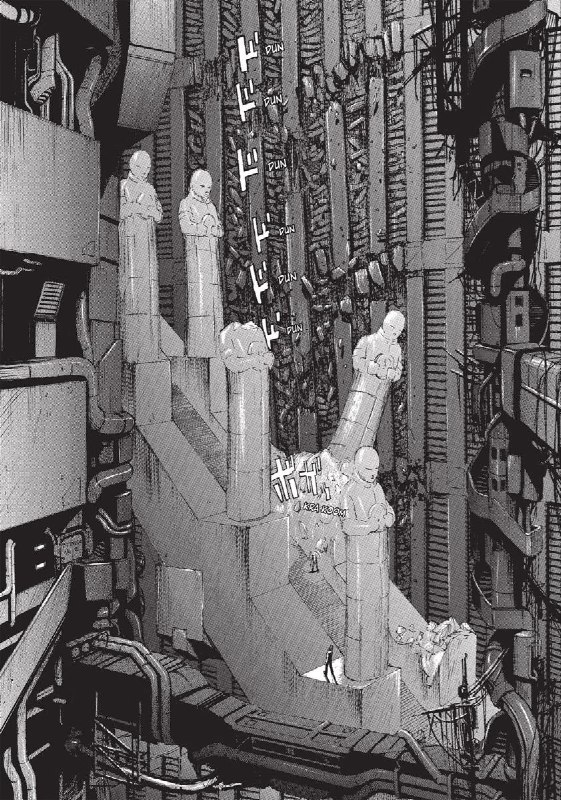

The people reading and writing cyberpunk fiction for the past 40+ years were more prepared for this era than anyone else.

ᴇɴᴅ ᴡᴏʀʟᴅꜱ, 2024

onespeg

