Github LLMs

@Raminmousa


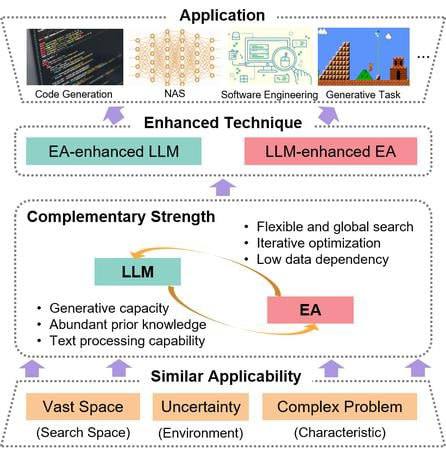

Evolutionary Computation in the Era of Large Language Model: Survey and Roadmap

Large language models (LLMs) have not only revolutionized natural language processing but also extended their prowess to various domains, marking a significant stride towards artificial general intelligence. LLMs and evolutionary algorithms (EAs), despite differing in objectives and methodologies, share a common pursuit of applicability to complex problems. EAs can provide an optimization framework for further enhancing LLMs under black-box settings, giving LLMs flexible global search capabilities. Conversely, the abundant domain knowledge inherent in LLMs could enable EAs to conduct more intelligent searches. Furthermore, the text processing and generative capabilities of LLMs would aid in deploying EAs across a wide range of tasks. Based on these complementary advantages, this paper provides a thorough review and a forward-looking roadmap, categorizing the reciprocal inspiration into two main avenues: LLM-enhanced EA and EA-enhanced #LLM. Some integrated synergy methods are further introduced to exemplify the complementarity between LLMs and EAs in diverse scenarios, including code generation, software engineering, neural architecture search, and various generation tasks. As the first comprehensive review focused on EA research in the era of #LLMs, this paper provides a foundational stepping stone for understanding the collaborative potential of LLMs and EAs. The identified challenges and future directions offer guidance for researchers and practitioners to unlock the full potential of this collaboration in advancing optimization and artificial intelligence.

Paper: https://arxiv.org/pdf/2401.10034v3.pdf
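
As a rough illustration of the "LLM-enhanced EA" avenue described in the abstract, here is a minimal sketch of an elitist evolutionary loop in which the variation step is delegated to a model. Everything in it is an assumption made for illustration and is not taken from the paper: the toy string-matching objective, the names `fitness`, `propose_variant`, and `evolve`, and above all `propose_variant` itself, which is a hypothetical stand-in that mutates one character at random where a real system would prompt an LLM for a rewritten candidate.

```python
import random

TARGET = "evolve"  # toy objective, assumed purely for illustration
ALPHABET = "abcdefghijklmnopqrstuvwxyz"


def fitness(candidate: str) -> int:
    """Count positions where the candidate matches TARGET (higher is better)."""
    return sum(a == b for a, b in zip(candidate, TARGET))


def propose_variant(parent: str, rng: random.Random) -> str:
    """Hypothetical stand-in for an LLM call that rewrites a candidate.

    A real LLM-enhanced EA would send `parent` (and possibly its score) in a
    prompt and parse the reply; here we only replace one random character.
    """
    i = rng.randrange(len(parent))
    return parent[:i] + rng.choice(ALPHABET) + parent[i + 1:]


def evolve(generations: int = 200, pop_size: int = 8, seed: int = 0):
    """Run an elitist (mu + lambda) loop; return the best candidate and its score history."""
    rng = random.Random(seed)
    population = [
        "".join(rng.choice(ALPHABET) for _ in TARGET) for _ in range(pop_size)
    ]
    history = []
    for _ in range(generations):
        # Variation: each parent yields one child via the (stubbed) model.
        children = [propose_variant(p, rng) for p in population]
        # Selection: keep the best pop_size of parents plus children.
        population = sorted(population + children, key=fitness, reverse=True)[:pop_size]
        history.append(fitness(population[0]))
    return population[0], history


if __name__ == "__main__":
    best, history = evolve()
    print(best, history[-1])
```

Because parents compete with their children for survival, the best score never decreases from one generation to the next. Swapping the stub for a real model call would leave the loop itself unchanged.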

Foundations of Large Language Models

📝 Table of Contents:

● Pre-training

● Generative Models

● Prompting

● Alignment

Tong Xiao and Jingbo Zhu

January 17, 2025

📃 Download from arXiv.

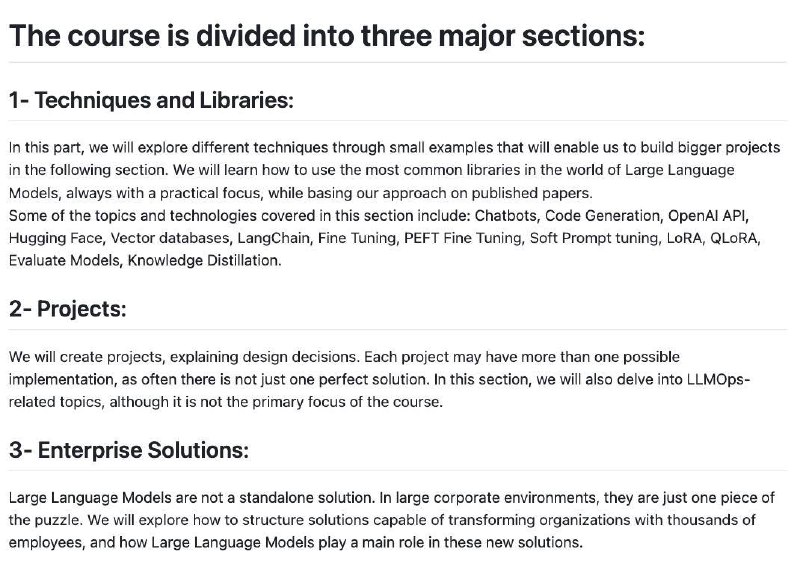

Large Language Models Course: Learn by Doing LLM Projects

🖥 Github: https://github.com/peremartra/Large-Language-Model-Notebooks-Course

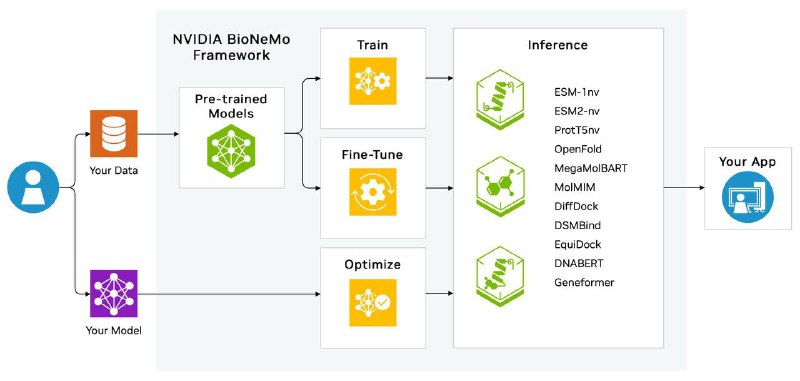

🌟 BioNeMo: A Framework for Developing AI Models for Drug Design.

NVIDIA BioNeMo2 Framework is a set of tools, libraries, and models for computational drug discovery and design.

▶️ Pre-trained models:

🟢 ESM-2 is a pre-trained bidirectional encoder (BERT-like) for amino acid sequences. BioNeMo2 includes checkpoints with 650M and 3B parameters;

🟢 Geneformer is a tabular scoring model that generates a dense representation of a cell's scRNA by examining co-expression patterns in individual cells.

▶️ Datasets:

🟠 CELLxGENE is a collection of publicly available single-cell datasets collected by the CZI (Chan Zuckerberg Initiative) with a total volume of 24 million cells;

🟠 UniProt is a database of clustered sets of protein sequences from UniProtKB, created on the basis of translated genomic data.

🟡 Project page

🟡 Documentation

🖥 GitHub

Fine-tuning LLMs with Hugging Face: code

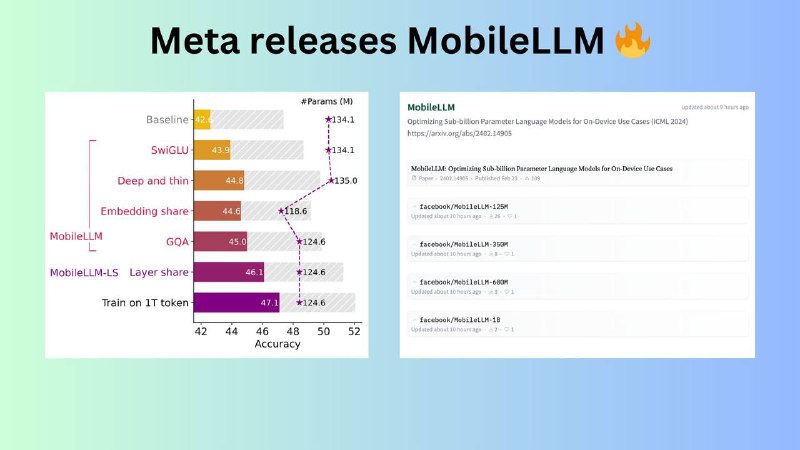

⚡️ MobileLLM

🟢 MobileLLM-125M: 30 layers, 9 attention heads, 3 KV heads, token dimension 576;

🟢 MobileLLM-350M: 32 layers, 15 attention heads, 5 KV heads, token dimension 960;

🟢 MobileLLM-600M: 40 layers, 18 attention heads, 6 KV heads, token dimension 1152;

🟢 MobileLLM-1B: 54 layers, 20 attention heads, 5 KV heads, token dimension 1280.
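
The figures above list fewer KV heads than attention heads, which is the shape of grouped-query attention: several query heads share one key/value head. The sketch below only does arithmetic on the listed numbers. The class and property names (`Config`, `head_dim`, `queries_per_kv`, `kv_values_per_token`) are illustrative and do not come from the MobileLLM code. The per-token cache count assumes one key and one value vector per KV head per layer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Config:
    name: str
    layers: int
    heads: int      # attention (query) heads
    kv_heads: int   # key/value heads
    dim: int        # token (embedding) dimension

    @property
    def head_dim(self) -> int:
        """Width of a single attention head."""
        return self.dim // self.heads

    @property
    def queries_per_kv(self) -> int:
        """How many query heads share one KV head."""
        return self.heads // self.kv_heads

    @property
    def kv_values_per_token(self) -> int:
        """Cached numbers per token: one key and one value vector per KV head per layer."""
        return 2 * self.layers * self.kv_heads * self.head_dim


# Values copied from the list above.
CONFIGS = [
    Config("MobileLLM-125M", 30, 9, 3, 576),
    Config("MobileLLM-350M", 32, 15, 5, 960),
    Config("MobileLLM-600M", 40, 18, 6, 1152),
    Config("MobileLLM-1B", 54, 20, 5, 1280),
]

if __name__ == "__main__":
    for c in CONFIGS:
        print(c.name, c.head_dim, c.queries_per_kv, c.kv_values_per_token)
```

On these numbers, every variant has a head width of 64. The three smaller models share each KV head among 3 query heads, and the 1B model among 4, so the key/value cache is a third (or a quarter) of what it would be with one KV head per attention head.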

LLM-based agents for software engineering: "Large Language Model-Based Agents for Software Engineering: A Survey".
