DataCamp

Community chat: https://t.me/hamster_kombat_chat_2

Website: https://hamster.network

Twitter: x.com/hamster_kombat

YouTube: https://www.youtube.com/@HamsterKombat_Official

Bot: https://t.me/hamster_kombat_bot

Last updated 11 months, 1 week ago

Your easy, fun crypto trading app for buying and trading any crypto on the market.

📱 App: @Blum

🤖 Trading Bot: @BlumCryptoTradingBot

🆘 Help: @BlumSupport

💬 Chat: @BlumCrypto_Chat

Last updated 1 year, 4 months ago

Turn your endless taps into a financial tool.

Join @tapswap_bot

Collaboration - @taping_Guru

Last updated 11 months, 4 weeks ago

DataCamp Free Access Week is LIVE!

Get 100% free and unlimited access to our full course library.

No catch, no card details needed. Just hit the link and explore everything DataCamp has to offer.

Sign up now: ow.ly/P6Mt50TZif8

It took me 6 weeks to learn overfitting. I'll share in 6 minutes (business case study included). Let's dive in:

-

Overfitting is a common issue in machine learning and statistical modeling. It occurs when a model is too complex and captures not only the underlying pattern in the data but also the noise.

-

Key Characteristics of Overfitting: High Performance on Training Data, Poor Performance on Test Data, Overly Complex with many parameters, Sensitive to minor fluctuations in training data (not robust).
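
To make the train/test gap concrete, here is a minimal sketch on made-up data. It assumes a 1-D problem where the true rule is `x > 0.5` and 20% of labels are flipped as noise; the 1-nearest-neighbor model stands in for "overly complex" because it memorizes every training point, noise included. The data, function names, and noise level are all illustrative assumptions.

```python
import random

random.seed(0)

def make_data(n):
    """Noisy 1-D data: the true rule is x > 0.5, but 20% of labels are flipped."""
    data = []
    for _ in range(n):
        x = random.random()
        label = int(x > 0.5)
        if random.random() < 0.2:  # label noise
            label = 1 - label
        data.append((x, label))
    return data

def predict_1nn(train, x):
    """Overly flexible model: copy the label of the closest training point."""
    nearest = min(train, key=lambda point: abs(point[0] - x))
    return nearest[1]

def predict_threshold(x):
    """Simple model: a single cut at 0.5 (assumed known for this sketch)."""
    return int(x > 0.5)

def accuracy(predict, data):
    """Fraction of points where the prediction matches the label."""
    return sum(predict(x) == y for x, y in data) / len(data)

train, test = make_data(200), make_data(200)

train_acc_1nn = accuracy(lambda x: predict_1nn(train, x), train)
test_acc_1nn = accuracy(lambda x: predict_1nn(train, x), test)
train_acc_simple = accuracy(predict_threshold, train)
test_acc_simple = accuracy(predict_threshold, test)

print(f"1-NN:      train={train_acc_1nn:.2f}  test={test_acc_1nn:.2f}")
print(f"threshold: train={train_acc_simple:.2f}  test={test_acc_simple:.2f}")
```

The memorizing model scores perfectly on the data it has seen because each training point is its own nearest neighbor. The simple rule cannot do that, but its training and test scores should sit much closer together.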

-

How to Avoid Overfitting (and Underfitting): The goal is a model that is robust (not overly sensitive) and generalizes well to new data (unseen during model training). We get there by balancing the bias-variance tradeoff. Common techniques: K-Fold Cross Validation, Regularization (penalizing large coefficients), and even simplifying the model.
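
For the K-Fold Cross Validation idea, a rough sketch of the index bookkeeping looks like this. The function name `k_fold_indices` is made up for illustration, and the sketch skips shuffling, which you would normally want unless the data is ordered in time.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, val_idx) pairs; each sample is held out exactly once."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most 1.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, val_idx
        start += size

for fold, (train_idx, val_idx) in enumerate(k_fold_indices(10, 3)):
    print(f"fold {fold}: train={train_idx} validate={val_idx}")
```

You would fit the model on each `train_idx`, score it on the matching `val_idx`, and average the scores. A model that only looks good on the data it was fit on shows up quickly this way.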

-

How I learned about overfitting (business case): I was making a forecast model using linear regression. The model had dozens of features: lags, external regressors, economic features, calendar features... You name it, I included it. And the model did well (on the training data). The problem came when I put my first forecast model into production...

-

Lack of Stability (is a nice way to put it): My model went out of whack. The linear regression predicted demand for certain products at 100X their recent trends. Luckily, the demand planner called me out on it before the purchase orders went into effect.

-

I learned a lot from this: Linear regression models can be highly sensitive. I switched to penalized regression (elastic net) and the model became much more stable. Luckily my organization knew I was onto something, and I was given more chances to improve.
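
Here is a small sketch of why a penalty stabilizes things. It is not my production model: it uses ridge (an L2 penalty only) as a simpler stand-in for elastic net, which mixes L1 and L2 penalties, and the two nearly identical features and the target values are made up to mimic correlated regressors like lags.

```python
def fit_two_features(x1, x2, y, penalty=0.0):
    """Solve (X^T X + penalty * I) w = X^T y for two features, no intercept."""
    a = sum(v * v for v in x1) + penalty
    b = sum(u * v for u, v in zip(x1, x2))
    d = sum(v * v for v in x2) + penalty
    r1 = sum(u * t for u, t in zip(x1, y))
    r2 = sum(v * t for v, t in zip(x2, y))
    det = a * d - b * b  # determinant of the 2x2 system
    w1 = (d * r1 - b * r2) / det
    w2 = (a * r2 - b * r1) / det
    return w1, w2

# Hypothetical data: x2 is almost a copy of x1, and y is roughly equal to either.
x1 = [1.0, 2.0, 3.0, 4.0, 5.0]
x2 = [1.01, 1.99, 3.02, 3.98, 5.01]
y = [1.1, 1.9, 3.2, 3.9, 5.1]

w_ols = fit_two_features(x1, x2, y)                # plain linear regression
w_ridge = fit_two_features(x1, x2, y, penalty=1.0)  # penalized regression

print("no penalty:  ", w_ols)
print("with penalty:", w_ridge)
```

Without the penalty, the two correlated features get large coefficients with opposite signs that mostly cancel, so a small change in the inputs can swing the forecast. With the penalty, the weight is shared between them in small, similar amounts.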

-

The end result: We actually called the end of the Oil Recession of 2016 with my model, and workforce planning was ready to meet the increased demand. This saved us 3 months of inventory time and gave us a competitive advantage when orders began ramping up.

Estimated savings: 10% of sales x 3 months = $6,000,000.

Pretty shocking what a couple data science skills can do for a business.

Which one is the best classification algorithm?

Don't forget this line:

'All models are wrong, but some models are useful.' - George Box

Here are 5 classification models to start with:

-

Logistic Regression

LR is mainly used for binary classifications, such as 'yes' or 'no' cases.

The output is between 0 and 1, so it can be translated into a probability.

It's effective with simple problems but may struggle with complex ones.

-
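
For the Logistic Regression point about outputs between 0 and 1, a minimal sketch of the scoring step is below. The weights, bias, and feature values are hypothetical; a real model would learn them from data.

```python
import math

def sigmoid(z):
    """Squash any real number into (0, 1); written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def predict_proba(features, weights, bias):
    """Linear score passed through the sigmoid, read as P(class = 'yes')."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return sigmoid(z)

# Hypothetical model with two features.
weights, bias = [0.8, -0.5], 0.1
p = predict_proba([2.0, 1.0], weights, bias)
label = "yes" if p >= 0.5 else "no"
print(f"probability={p:.3f} -> {label}")
```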

Decision Trees

Tree-based models split the data into different subsets based on the input.

They are easy to visualize, so you can follow each step and see how the model works.

They are simple and effective, but be careful with overfitting!

-
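
For the Decision Trees point about splitting data into subsets, here is a sketch of how a single split might be chosen. It assumes binary 0/1 labels, one numeric feature, and Gini impurity as the split criterion (one common choice, not the only one); the data is made up.

```python
def gini(labels):
    """Gini impurity for binary 0/1 labels: 0 means the subset is pure."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2 * p * (1 - p)

def best_split(xs, ys):
    """Try each observed value as a threshold; keep the lowest weighted impurity."""
    best = None
    for threshold in sorted(set(xs)):
        left = [y for x, y in zip(xs, ys) if x <= threshold]
        right = [y for x, y in zip(xs, ys) if x > threshold]
        if not left or not right:
            continue  # a split must put something on both sides
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(ys)
        if best is None or score < best[1]:
            best = (threshold, score)
    return best

xs = [1, 2, 3, 10, 11, 12]
ys = [0, 0, 0, 1, 1, 1]
print(best_split(xs, ys))  # (3, 0.0): splitting at x <= 3 separates the classes
```

A full tree repeats this step inside each subset. Keep splitting long enough and every leaf ends up holding a single training point, which is where the overfitting risk comes from.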

Random Forest

Random Forest builds multiple decision trees to improve accuracy.

It's great for large datasets and reduces the risk of overfitting.

Each tree in the forest has a so-called vote, and the majority vote decides the outcome.

-
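
The Random Forest voting step can be sketched like this. The three "trees" are hand-written rules with made-up feature names, standing in for trees that a real forest would learn from random samples of the data.

```python
from collections import Counter

def majority_vote(votes):
    """Return the most common vote (ties go to the vote seen first)."""
    return Counter(votes).most_common(1)[0][0]

# Hypothetical stand-ins for trained trees; each looks at a different feature.
trees = [
    lambda row: "buy" if row["visits"] > 3 else "no buy",
    lambda row: "buy" if row["cart_items"] > 0 else "no buy",
    lambda row: "buy" if row["minutes_on_site"] > 10 else "no buy",
]

def forest_predict(row):
    """Collect one vote per tree and let the majority decide."""
    votes = [tree(row) for tree in trees]
    return majority_vote(votes)

row = {"visits": 5, "cart_items": 0, "minutes_on_site": 12}
print(forest_predict(row))  # two of three trees say "buy"
```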

Support Vector Machines (SVM)

SVM is effective for both linear and non-linear classification.

It works best when there is a clear margin between categories, but it can still tolerate some points falling on the wrong side of that margin.

It can be computationally expensive.

-

K-Nearest Neighbors (KNN)

KNN classifies data based on the closest neighboring points.

Finding the optimal value of K can be a struggle.

Yet it's simple and effective with small datasets.
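
To see why K matters for KNN, here is a small sketch on made-up 2-D points. It assumes Euclidean distance and a plain majority vote among the K closest points; the same query flips class as K changes.

```python
import math
from collections import Counter

def knn_predict(train, query, k):
    """Label the query by majority vote among its k closest training points."""
    by_distance = sorted(train, key=lambda item: math.dist(item[0], query))
    top_labels = [label for _, label in by_distance[:k]]
    return Counter(top_labels).most_common(1)[0][0]

# Hypothetical training set: (point, label)
train = [
    ((1.0, 1.0), "A"), ((1.2, 0.8), "A"), ((0.9, 1.3), "A"),
    ((3.0, 3.0), "B"), ((3.2, 2.9), "B"), ((2.0, 2.0), "B"),
]
query = (1.8, 1.8)

print(knn_predict(train, query, k=1))  # B: the single closest point is (2.0, 2.0)
print(knn_predict(train, query, k=3))  # A: two of the three closest points are A
```

In practice you would try several values of K and keep the one that scores best on held-out data.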

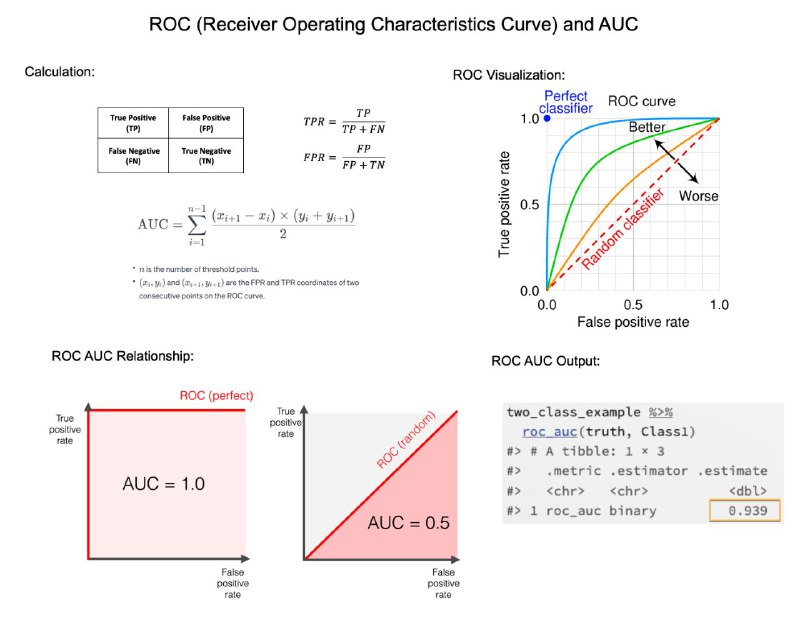

ROC and AUC are important concepts for evaluating classification models in business (e.g. lead scoring). In 6 minutes, I'll share what took me 60 days to figure out. Let's dive in.

-

ROC Curve: The ROC curve, which stands for Receiver Operating Characteristic curve, is a graphical representation used to evaluate the performance of a binary classifier system as its discrimination threshold is varied.

-

True Positive Rate (TPR): On the y-axis, the ROC curve plots the True Positive Rate (also known as sensitivity, or recall) which measures the proportion of actual positives that are correctly identified as such. It's calculated as TPR = TP / (TP + FN), where TP is true positives and FN is false negatives.

-

False Positive Rate (FPR): On the x-axis, the curve plots the False Positive Rate, which measures the proportion of actual negatives that are incorrectly identified as positives. It's calculated as FPR = FP / (FP + TN), where FP is false positives and TN is true negatives.
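
The TPR and FPR formulas above translate directly into code. This sketch assumes 0/1 labels and uses made-up predictions.

```python
def rates(y_true, y_pred):
    """Return (TPR, FPR) for binary 0/1 labels and predictions."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    tpr = tp / (tp + fn)  # share of actual positives we caught
    fpr = fp / (fp + tn)  # share of actual negatives we wrongly flagged
    return tpr, fpr

y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 1, 0, 0, 1]
print(rates(y_true, y_pred))  # (0.75, 0.25): TP=3, FN=1, FP=1, TN=3
```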

-

Thresholds: The ROC curve is created by plotting TPR against FPR at various threshold settings. A threshold in a classification algorithm is a point at which the decision is made whether a given instance belongs to a certain class.
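
Sweeping the threshold is what turns one model into a whole curve. A rough sketch, assuming 0/1 labels, made-up scores (e.g. lead scores), and the rule "predict positive when score >= threshold":

```python
def roc_points(y_true, scores):
    """Return (FPR, TPR) pairs, one per threshold, from strictest to loosest."""
    positives = sum(y_true)
    negatives = len(y_true) - positives
    points = [(0.0, 0.0)]  # threshold above every score: nothing flagged
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for t, s in zip(y_true, scores) if s >= threshold and t == 1)
        fp = sum(1 for t, s in zip(y_true, scores) if s >= threshold and t == 0)
        points.append((fp / negatives, tp / positives))
    return points

y_true = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]

for fpr, tpr in roc_points(y_true, scores):
    print(f"FPR={fpr:.2f}  TPR={tpr:.2f}")
```

Lowering the threshold can only add predicted positives, so both rates climb from (0, 0) toward (1, 1).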

-

Area Under the Curve (AUC): The area under the ROC curve is a measure of the effectiveness of a binary classification algorithm. An AUC of 1 represents a perfect classifier, while an AUC of 0.5 represents a worthless classifier (no better than random guessing).

-

AUC Calculation: The most common method for calculating the AUC of an ROC curve is by using the trapezoidal rule. This approach involves approximating the area under the curve by summing up the areas of trapezoids formed beneath the curve.
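
The trapezoidal rule itself is only a few lines. This sketch assumes the ROC points are already sorted by FPR; the example points are made up, with the perfect and diagonal curves included as sanity checks.

```python
def auc_trapezoid(points):
    """Sum trapezoid areas between consecutive (FPR, TPR) points."""
    area = 0.0
    for (x1, y1), (x2, y2) in zip(points, points[1:]):
        area += (x2 - x1) * (y1 + y2) / 2  # width times average height
    return area

# Hypothetical ROC points for a 6-sample toy problem, sorted by FPR.
toy = [(0, 0), (0, 1/3), (0, 2/3), (1/3, 2/3), (1/3, 1), (2/3, 1), (1, 1)]
perfect = [(0, 0), (0, 1), (1, 1)]
diagonal = [(0, 0), (1, 1)]

print(auc_trapezoid(toy))       # about 0.889 (8/9)
print(auc_trapezoid(perfect))   # 1.0
print(auc_trapezoid(diagonal))  # 0.5
```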

-

Interpretation: A curve closer to the top-left corner indicates a better performance. As the area under the ROC curve increases, the model is better at distinguishing between the positive and negative classes.
