The AI & Quantum Computing Chronicle

For any suggestion/question:

Twitter: @ItalyHighTech/@KevinClarity

Telegram: @vzocca/@kcorella

Shannon entropy is a core concept in machine learning and information theory, particularly in decision tree modeling. To date, no studies have extensively and quantitatively applied Shannon entropy in a systematic way to quantify the entropy of clinical situations using diagnostic variables (true and false positives and negatives, respectively). Decision tree representations of medical decision-making tools can be generated using diagnostic variables found in literature and entropy removal can be calculated for these tools. This concept of clinical entropy removal has significant potential for further use to bring forth healthcare innovation, such as quantifying the impact of clinical guidelines and value of care and applications to Emergency Medicine scenarios where diagnostic accuracy in a limited time window is paramount. https://www.nature.com/articles/s41598-024-51268-4

Nature

Entropy removal of medical diagnostics

Scientific Reports - Entropy removal of medical diagnostics
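As a rough illustration of the idea in the abstract above, the sketch below computes how much Shannon entropy a binary diagnostic test removes, given counts of true and false positives and negatives. It uses the standard information-gain calculation (pre-test entropy minus expected post-test entropy); whether this matches the paper's exact definition is an assumption, and the function names and example counts are hypothetical.

```python
from math import log2


def binary_entropy(p: float) -> float:
    """Shannon entropy (in bits) of a yes/no outcome with probability p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0  # a certain outcome carries no uncertainty
    return -p * log2(p) - (1 - p) * log2(1 - p)


def entropy_removed(tp: int, fp: int, fn: int, tn: int) -> float:
    """Bits of diagnostic uncertainty removed by the test (information gain).

    Assumption: "entropy removal" is taken to be pre-test entropy minus the
    expected post-test entropy; the paper may define it differently.
    """
    n = tp + fp + fn + tn
    if n == 0:
        raise ValueError("at least one observation is required")

    # Uncertainty about disease status before testing (set by prevalence).
    h_before = binary_entropy((tp + fn) / n)

    # Expected uncertainty after seeing a positive or a negative result.
    h_after = 0.0
    for diseased, total in ((tp, tp + fp), (fn, fn + tn)):
        if total > 0:
            h_after += (total / n) * binary_entropy(diseased / total)

    return h_before - h_after


if __name__ == "__main__":
    # Hypothetical test with 90% sensitivity and specificity at 50% prevalence.
    print(f"{entropy_removed(tp=45, fp=5, fn=5, tn=45):.3f} bits removed")
```

A test that is no better than chance removes 0 bits, while a perfect test at 50% prevalence removes the full 1 bit of pre-test uncertainty.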

Physicists have long struggled to pin down the details of this process. Now, a team of four researchers has proved that entanglement doesn’t just weaken as temperature increases. Rather, in mathematical models of quantum systems such as the arrays of atoms in physical materials, there’s always a specific temperature above which it vanishes completely. “It’s not just that it’s exponentially small,” said one of the authors of the new result, who is at the Massachusetts Institute of Technology. “It’s zero.” https://www.quantamagazine.org/computer-scientists-prove-that-heat-destroys-entanglement-20240828/

Quanta Magazine

Computer Scientists Prove That Heat Destroys Quantum Entanglement

While devising a new quantum algorithm, four researchers accidentally established a hard limit on the “spooky” phenomenon.

Quantum computers are designed to outperform standard computers by running quantum algorithms. Areas in which quantum algorithms can be applied include cryptography, search and optimisation, simulation of quantum systems and solving large systems of linear equations. Here we briefly survey some known quantum algorithms, with an emphasis on a broad overview of their applications rather than their technical details. We include a discussion of recent developments and near-term applications of quantum algorithms. https://www.nature.com/articles/npjqi201523

Nature

Quantum algorithms: an overview

npj Quantum Information - Quantum algorithms: an overview

What Is Spacetime?

General relativity predicts that matter falling into a black hole becomes compressed without limit as it approaches the center—a mathematical cul-de-sac called a singularity. Theorists cannot extrapolate the trajectory of an object beyond the singularity; its time line ends there. Even to speak of “there” is problematic because the very spacetime that would define the location of the singularity ceases to exist. Researchers hope that quantum theory could focus a microscope on that point and track what becomes of the material that falls in. https://www.nature.com/articles/d41586-018-05095-z

Nature

What Is Spacetime?

Nature - Physicists believe that at the tiniest scales, space emerges from quanta. What might these building blocks look like?

In this article, the authors propose an adaptation of two cutting-edge concepts in the field of deep learning, namely the Transformer architecture and [...] through its [...] version. [...] With their specific learning task, different from that used for the Temporal Fusion Transformer, [...]. Their approach clearly outperforms the standard method - https://arxiv.org/pdf/2406.02486v2

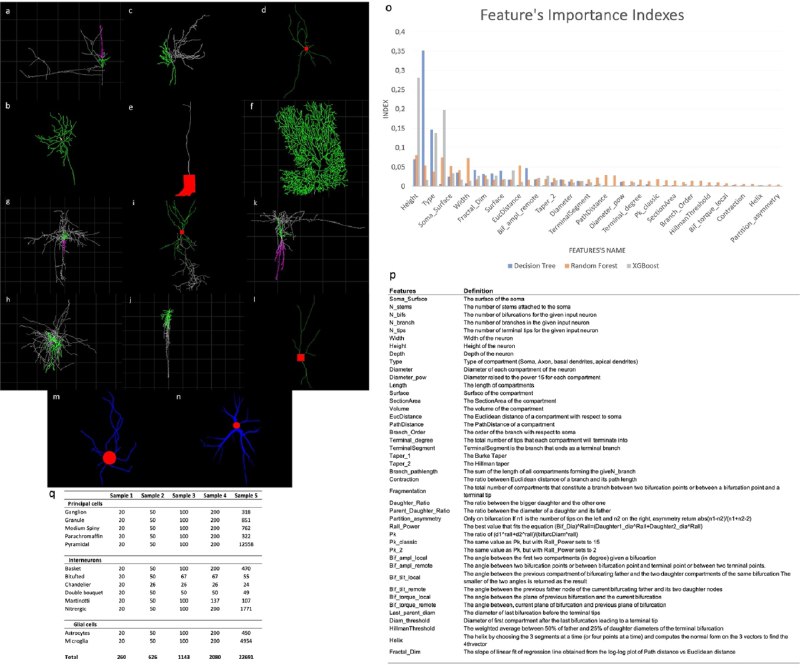

Despite the inherent complexity and challenges that neuroscientists must deal with while addressing neuronal classification, numerous reasons exist for interest in this topic. Some brain diseases affect specific cell types. Neuron morphology studies may lead to the identification of genes to target for specific cell morphologies and the functions linked to them. A neuron undergoes different stages of development before acquiring its ultimate structure and function, which must be understood to identify new markers, marker combinations, or mediators of developmental choices. Understanding neuron morphology represents the basis of the modeling effort and the data-driven modeling approach for studying the impact of a cell’s morphology on its electrical behavior and function as well as on the network dynamics that the cell belongs to. https://www.nature.com/articles/s41598-023-38558-z

Nature

Application of quantum machine learning using quantum kernel algorithms on multiclass neuron M-type classification

Scientific Reports - Application of quantum machine learning using quantum kernel algorithms on multiclass neuron M-type classification

Typically, when engineers build machine learning models out of neural networks, which are composed of units of computation called artificial neurons, they tend to stop the training at a certain point, called the overfitting regime. This is when the network basically begins memorizing its training data and often won’t generalize to new, unseen information. But when the OpenAI team accidentally trained a small network way beyond this point, it seemed to develop an understanding of the problem that went beyond simply memorizing: it could suddenly ace any test data.

The researchers named the phenomenon “grokking,” a term coined by science-fiction author Robert A. Heinlein to mean understanding something “so thoroughly that the observer becomes a part of the process being observed.” The overtrained neural network, designed to perform certain mathematical operations, had learned the general structure of the numbers and internalized the result. It had grokked and become the solution. https://www.quantamagazine.org/how-do-machines-grok-data-20240412/

Quanta Magazine

How Do Machines ‘Grok’ Data?

By apparently overtraining them, researchers have seen neural networks discover novel solutions to problems.

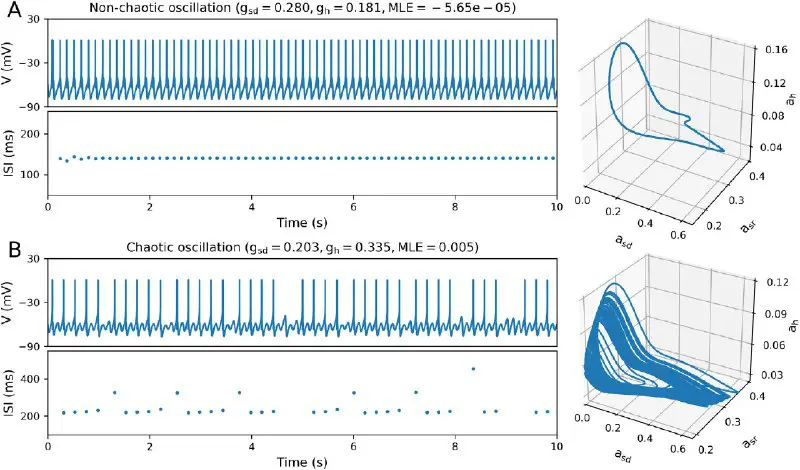

Chaotic dynamics has been shown in the dynamics of neurons and neural networks, in experimental data and numerical simulations. Theoretical studies have proposed an underlying role of chaos in neural systems. Nevertheless, whether chaotic neural oscillators make a significant contribution to network behaviour and whether the dynamical richness of neural networks is sensitive to the dynamics of isolated neurons, still remain open questions. We investigated synchronization transitions in heterogeneous neural networks of neurons connected by electrical coupling in a small world topology. The nodes in our model are oscillatory neurons that – when isolated – can exhibit either chaotic or non-chaotic behaviour, depending on conductance parameters. https://www.nature.com/articles/s41598-018-26730-9

Nature

Synchronization transition in neuronal networks composed of chaotic or non-chaotic oscillators

Scientific Reports - Synchronization transition in neuronal networks composed of chaotic or non-chaotic oscillators

The differences between human and machine learning—when it comes to language (as well as other domains)—are stark. While LLMs are introduced to and trained with trillions of words of text, human language “training” happens at a much slower rate. To illustrate, a human infant or child hears—from parents, teachers, siblings, friends and their surroundings—an average of roughly 20,000 words a day (e.g., Gilkerson et al., 2017; Hart and Risley, 2003). So, in its first five years a child might be exposed to—or “trained” with—some 36.5 million words. By comparison, LLMs are trained with trillions of tokens within a short time interval of weeks or months. The inputs differ radically in terms of quantity (sheer amount), but also in terms of their quality.

But can an LLM—or any prediction-oriented cognitive AI—truly generate some form of new knowledge? We do not believe they can. One way to think about this is that an LLM could be said to have “Wiki-level knowledge” on varied topics in the sense that these forms of AI can summarize, represent, and mirror the words (and associated ideas) it has encountered in myriad different and new ways. On any given topic (if sufficiently represented in the training data), an LLM can generate indefinite numbers of coherent, fluent, and well-written Wikipedia articles. But just as a subject-matter expert is unlikely to learn anything new about their specialty from a Wikipedia article within their domain, so an LLM is highly unlikely to somehow bootstrap knowledge beyond the combinatorial possibilities of the data and word associations it has encountered in the past.

AI is anchored on data-driven prediction. We argue that AI’s data and prediction-orientation is an incomplete view of human cognition. While we grant that there are some parallels between AI and human cognition—as a (broad) form of information processing—we focus on key differences. We specifically emphasize the forward-looking nature of human cognition and how theory-based causal logic enables humans to intervene in the world, to engage in directed experimentation, and to problem solve. -

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4737265

SSRN

Theory Is All You Need: AI, Human Cognition, and Decision Making

Artificial intelligence (AI) now matches or outperforms human intelligence in an astonishing array of games, tests, and other cognitive tasks that involve high-
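The word-exposure estimate in the excerpt above is simple arithmetic, and a short sketch makes the scale gap concrete. The 20,000 words per day and five-year window come from the excerpt; the figure of one trillion tokens is an assumption standing in for the low end of "trillions", and words and tokens are not the same unit, so the ratio is only indicative.

```python
def words_heard(words_per_day: int, years: int, days_per_year: int = 365) -> int:
    """Total words heard at a constant daily rate (leap days ignored)."""
    return words_per_day * days_per_year * years


# Figures from the excerpt: roughly 20,000 words a day over the first five years.
CHILD_WORDS = words_heard(words_per_day=20_000, years=5)

# Assumption: one trillion tokens, the low end of "trillions".
ASSUMED_LLM_TOKENS = 10**12

ratio = ASSUMED_LLM_TOKENS / CHILD_WORDS
years_needed = ASSUMED_LLM_TOKENS / words_heard(words_per_day=20_000, years=1)

if __name__ == "__main__":
    print(f"Child, first five years: {CHILD_WORDS:,} words")
    print(f"Assumed LLM corpus is about {ratio:,.0f} times larger")
    print(f"Years of listening needed to match it: about {years_needed:,.0f}")
```

Under these assumptions the child's total comes to 36,500,000 words, matching the 36.5 million quoted in the excerpt.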

Diffusion Models From Scratch. Here, we'll cover the derivations from scratch to provide a rigorous understanding of the core ideas behind diffusion. What assumptions are we making? What properties arise as a result? - https://www.tonyduan.com/diffusion/index.html
