Graph Machine Learning

If you have something worth sharing with the community, reach out to @gimmeblues or @chaitjo.

Admins: Sergey Ivanov; Michael Galkin; Chaitanya K. Joshi


Graph Machine Learning @ ICML 2022

In case you missed all the ICML’22 fun, we prepared a comprehensive overview of graph papers published at the conference: 35+ papers in 10 categories:

- Generation: Denoising Diffusion Is All You Need

- Graph Transformers

- Theory and Expressive GNNs

- Spectral GNNs

- Explainable GNNs

- Graph Augmentation: Beyond Edge Dropout

- Algorithmic Reasoning and Graph Algorithms

- Knowledge Graph Reasoning

- Computational Biology: Molecular Linking, Protein Binding, Property Prediction

- Cool Graph Applications

Medium

Graph Machine Learning @ ICML 2022

Recent advancements and hot trends, July 2022 edition

Upcoming Graph Workshops

If you are finishing a project and would like to test the waters and get a first round of reviews on your work, consider submitting to these recently announced workshops:

- Federated Learning with Graph Data (FedGraph) @ CIKM 2022 - deadline August 15

- Trustworthy Learning on Graphs (TrustLOG) @ CIKM 2022 - deadline September 2

- New Frontiers in Graph Learning (GLFrontiers) @ NeurIPS 2022 - deadline September 15

- Symmetry and Geometry in Neural Representations (NeurReps) @ NeurIPS 2022 - deadline September 22

FedGraph2022

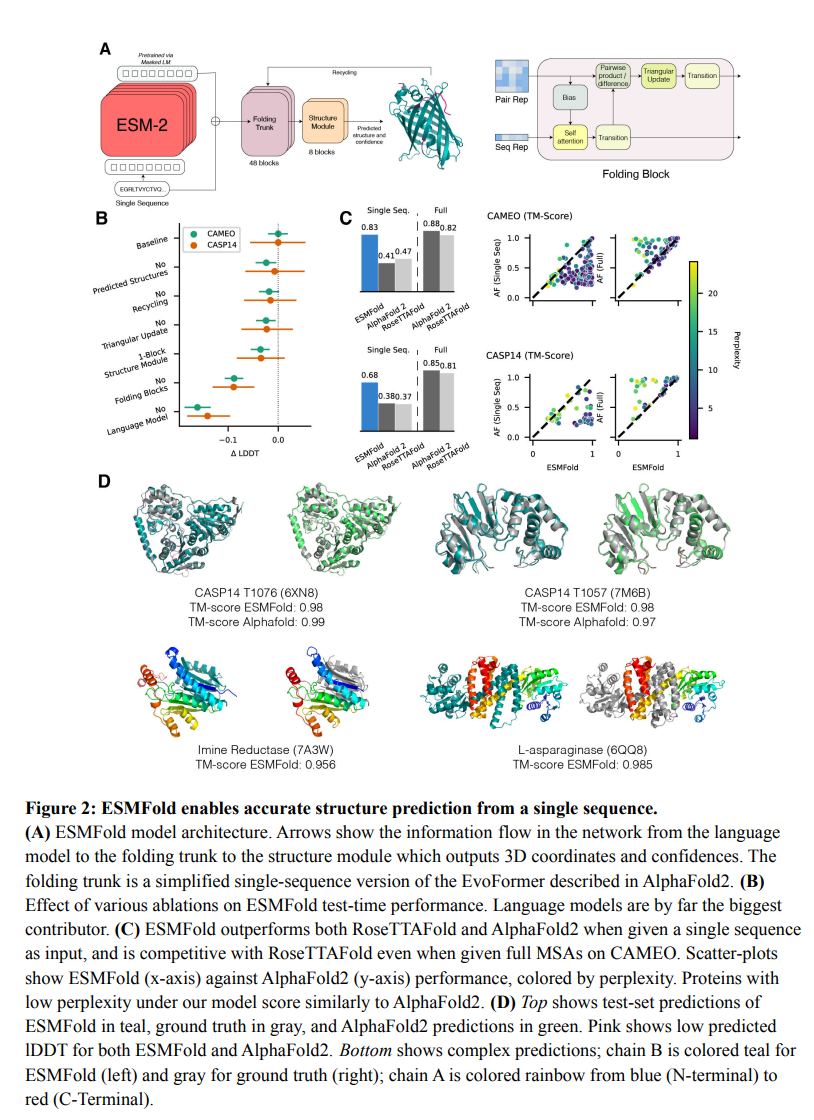

ESMFold: Protein Language Models Solve Folding, Too

Today, the Meta AI Protein Team announced ESMFold, a protein folding model that uses representations taken directly from a protein language model (LM). Meta AI has been working on BERT-style protein language models for a while; e.g., they created the ESM family of models, which are currently SOTA on masked protein sequence prediction tasks.

“A key difference between ESMFold and AlphaFold2 is the use of language model representations to remove the need for explicit homologous sequences (in the form of an MSA) as input.”

To this end, the authors design ESM-2, a new family of protein LMs. ESM-2 models are much more parameter-efficient than ESM-1b: e.g., the 150M-parameter ESM-2 is on par with the 650M-parameter ESM-1b, and the 15B ESM-2 leaves all ESM-1 models far behind. On top of the pre-trained LM, ESMFold applies Folding Trunk blocks (simplified Evoformer blocks from AlphaFold 2) and outputs 3D structure predictions.

ESMFold outperforms AlphaFold and RoseTTAFold when given only a single-sequence input without MSAs, and it is also much faster! Check out the attached illustration with the architecture and charts.

“On a single NVIDIA V100 GPU, ESMFold makes a prediction on a protein with 384 residues in 14.2 seconds, 6X faster than a single AlphaFold2 model. On shorter sequences we see a ~60X improvement. … ESMFold can be run reasonably quickly on CPU, and an Apple M1 Macbook Pro makes the same prediction in just over 5 minutes.”

Finally, ESMFold shows remarkable scaling properties:

“We see non-linear improvements in protein structure predictions as a function of model scale, and observe a strong link between how well the language model understands a sequence (as measured by perplexity) and the structure prediction that emerges.”

Have you already converted to the church of Scale Is All You Need - AGI Is Coming?

![ESMFold: Protein Language Models Solve Folding, Too](/media/attachments/gra/graphml/693.jpg)

Origins of Geometric Deep Learning - Part 2 and 3

A while ago, we referenced the first article in Michael Bronstein's series on the Origins of Geometric DL. Recently, the series got new episodes: Part 2 focuses on the high hopes for the perceptron, the curse of dimensionality, and the first AI winters. Part 3 introduces the first architectures with baked-in geometric priors: the neocognitron (a precursor of convnets) and convolutional neural networks.

As always, Michael did a great and meticulous job of finding the original references and commenting on them; often, the references section is as interesting and informative as the main text!

Medium

Towards Geometric Deep Learning II: The Perceptron Affair

In the second post of our series “Towards Geometric Deep Learning” we discuss how the criticism of Perceptrons led to geometric insights

ICML 2022 - Graph Workshops

ICML starts today with a full week of tutorials, main talks, and workshops. While we are preparing a blog post about interesting graph papers, you can already check out the programs of the graph and related workshops to be held on Friday and Saturday.

- Topology, Algebra, and Geometry in Machine Learning (TAG in ML)

- Knowledge Retrieval and Language Models (KRLM)

- Beyond Bayes: Paths Towards Universal Reasoning Systems

- Machine Learning in Computational Design

Tagds

TAG in Machine Learning

Call for Papers: Much of the data that is fueling current rapid advances in machine learning is high dimensional, structurally complex, and strongly nonlinear. This poses challenges for researcher intuition when they ask (i) how and why current algorithms…

TensorFlow GNN

TensorFlow GNN (TF-GNN) is a new scalable library for Graph Neural Networks in TensorFlow. It is designed from the ground up to support the kinds of rich heterogeneous graph data that occur in real-life use cases. Many production models at Google use TF-GNN, and it has recently been released as an open-source project. Google has released a paper that describes the TF-GNN data model, its Keras modeling API, and relevant capabilities such as graph sampling, distributed training, and accelerator support. A new version was just pushed to GitHub.
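To make the idea of a heterogeneous graph data model a bit more concrete, here is a minimal, framework-free sketch: typed node sets holding feature rows, typed edge sets stored as parallel lists of source/target indices, and one mean-aggregation message-passing step along a single edge set. Everything here (`HeteroGraph`, `EdgeSet`, `mean_pool_to_target`, the "author"/"paper" example) is hypothetical plain Python written for illustration; it is not the TF-GNN API (whose graph container is `tfgnn.GraphTensor`).

```python
from dataclasses import dataclass, field


@dataclass
class EdgeSet:
    """Hypothetical: edges of one type as parallel lists of source/target node indices."""
    source_set: str  # name of the node set the edges start from
    target_set: str  # name of the node set the edges point to
    source: list
    target: list


@dataclass
class HeteroGraph:
    """Hypothetical heterogeneous graph: named node sets and named edge sets."""
    node_sets: dict = field(default_factory=dict)  # name -> list of feature vectors
    edge_sets: dict = field(default_factory=dict)  # name -> EdgeSet


def mean_pool_to_target(graph, edge_set_name):
    """One message-passing step: average source features onto each target node."""
    es = graph.edge_sets[edge_set_name]
    src_feats = graph.node_sets[es.source_set]
    n_targets = len(graph.node_sets[es.target_set])
    dim = len(src_feats[0])
    sums = [[0.0] * dim for _ in range(n_targets)]
    counts = [0] * n_targets
    for s, t in zip(es.source, es.target):
        for d in range(dim):
            sums[t][d] += src_feats[s][d]
        counts[t] += 1
    # Targets with no incoming edges keep an all-zero message.
    return [[v / c for v in row] if c else row for row, c in zip(sums, counts)]


g = HeteroGraph(
    node_sets={"author": [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], "paper": [[0.0, 0.0], [0.0, 0.0]]},
    edge_sets={"writes": EdgeSet("author", "paper", source=[0, 1, 2], target=[0, 0, 1])},
)
print(mean_pool_to_target(g, "writes"))  # [[0.5, 0.5], [1.0, 1.0]]
```

Keeping each edge type in its own index-list edge set is what lets different node and edge types carry different feature shapes; a real library would batch these operations on tensors rather than loop in Python.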

GitHub

GitHub - tensorflow/gnn: TensorFlow GNN is a library to build Graph Neural Networks on the TensorFlow platform.


Graph ML Workshops and Summer Schools

This week is surprisingly packed with in-person meetings of the GraphML community featuring top speakers and lecturers. We expect all the materials to be recorded and made available online.

- London Geometry and ML Summer School

- Deep Exploration of non-Euclidean Data with Geometric and Topological Representation Learning

- Swiss Equivariant Machine Learning Workshop

Also, in 2 weeks there will be the Italian Summer School on Geometric DL.

LOGML

LOGML 2022 | London Geometry and Machine Learning

The London Geometry and Machine Learning Summer School 2022 (LOGML) aims to bring together geometers and machine learners to work together on a variety of problems. There will be a selection of group projects, each overseen by an experienced mentor, talks…

New Course: An Introduction to Group Equivariant Deep Learning

Erik Bekkers from the University of Amsterdam created a fantastic new course covering the most up-to-date flavor of GNNs, namely, equivariant and group-equivariant GNNs. The course consists of 3 lectures: it starts with an introduction to group theory, gradually moves on to equivariance and steerable kernels, and covers tensor products and irreducible representations (hello, Wigner matrices). After the course, you won’t be afraid of cryptic abbreviations like SO(3) or E(n)!

The course includes a YouTube playlist, slides, lecture notes, and Colab notebooks to play around with the real code.
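As a small taste of what "equivariance" means in practice, the sketch below checks numerically that a layer f commutes with a rigid motion g of the plane, i.e. f(g·x) = g·f(x). The toy coordinate update moves each point along its relative vectors to the other points, weighted by a function of the squared distance; this is written in the spirit of E(n)-equivariant GNNs but is a hypothetical illustration, not code from the course. The weight function `phi`, the `biased_update` counterexample, and all other names are assumptions made for this example, and only rotations plus translations (not reflections) are tested.

```python
import math


def phi(d2):
    # Hypothetical scalar weight; it depends only on the squared distance, which rigid motions preserve.
    return 1.0 / (1.0 + d2)


def egnn_style_update(points):
    """Toy coordinate update: x_i' = x_i + sum_j (x_i - x_j) * phi(|x_i - x_j|^2)."""
    out = []
    for i, (xi, yi) in enumerate(points):
        sx, sy = 0.0, 0.0
        for j, (xj, yj) in enumerate(points):
            if i == j:
                continue
            dx, dy = xi - xj, yi - yj
            w = phi(dx * dx + dy * dy)
            sx += w * dx
            sy += w * dy
        out.append((xi + sx, yi + sy))
    return out


def biased_update(points):
    # Shifts every point in a fixed direction, ignoring input orientation: NOT rotation-equivariant.
    return [(x + 1.0, y) for x, y in points]


def rigid_transform(points, theta, tx, ty):
    """Rotate by theta, then translate by (tx, ty): an element of E(2) without reflection."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]


def is_equivariant(layer, points, theta, tx, ty, tol=1e-9):
    """Check layer(g . x) == g . layer(x) for one rigid motion g."""
    lhs = layer(rigid_transform(points, theta, tx, ty))  # transform first, then apply layer
    rhs = rigid_transform(layer(points), theta, tx, ty)  # apply layer first, then transform
    return all(
        math.isclose(a, b, abs_tol=tol)
        for p, q in zip(lhs, rhs)
        for a, b in zip(p, q)
    )


pts = [(0.0, 0.0), (1.0, 0.5), (-0.3, 2.0)]
print(is_equivariant(egnn_style_update, pts, 0.7, 1.5, -2.0))  # True
print(is_equivariant(biased_update, pts, 0.7, 1.5, -2.0))  # False
```

The update is equivariant because it is built only from relative vectors (which rotate with the input) and distances (which do not change), so the output rotates and translates exactly like the input.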

If you are inspired by this topic, we highly recommend the upcoming course by Joey Bose (Mila and McGill) on Geometry and Generative Models, which goes even deeper into manifolds (hyperbolic, spherical, product), normalizing flows, ODEs, and denoising diffusion models.

uvagedl.github.io

UvA - An Introduction to Group Equivariant Deep Learning

Materials for the group equivariant deep learning course

Recap: Fields Medal & Graph Theory, Origins of Geometric Deep Learning

- The Fields Medal is often considered “the Nobel Prize in mathematics”. This year, the International Mathematical Union (IMU) announced 4 awardees: brilliant mathematicians Hugo Duminil-Copin (Université de Genève and IHÉS), June Huh (Princeton), James Maynard (Oxford), and Maryna Viazovska (EPFL).

It is heartwarming for the channel’s editors that June Huh’s research has direct connections to graph theory: first, he proved the 40-year-old Read’s conjecture that the coefficients of the chromatic polynomial (which counts the ways to color a graph) are unimodal in absolute value; he then studied those polynomials even more deeply and generalized the framework to matroids. Check out this wonderful Quanta Magazine article dedicated to June and his research.

- In case you have closed all your browser tabs and are looking for something new to read, Michael Bronstein comes to the rescue with a new blog post on the origins of Geometric Deep Learning. This is going to be a series of articles tracing the history of geometry from the ancient Greeks to GNNs.

![Recap: Fields Medal & Graph Theory, Origins of Geometric Deep Learning](/media/attachments/gra/graphml/687.jpg)
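For readers who want to play with the objects behind Read's conjecture from the Fields Medal item above: the chromatic polynomial P(G, k) counts proper colorings of a graph G with k colors and satisfies the deletion-contraction rule P(G, k) = P(G - e, k) - P(G / e, k). The sketch below is a plain, exponential-time implementation of that rule (fine for tiny graphs only), together with checks of unimodality and of the stronger log-concavity property that Huh proved for the absolute values of the coefficients. All function names are hypothetical, chosen for this example.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def _chromatic(vertices, edges):
    """Coefficients (a_0, ..., a_n) of P(G, k) = sum_i a_i k^i via deletion-contraction."""
    n = len(vertices)
    if not edges:
        # Edgeless graph: each of the n vertices picks any of k colors, so P = k^n.
        return tuple([0] * n + [1])
    e = min(edges, key=sorted)  # deterministic choice of the edge to delete/contract
    u, v = sorted(e)
    rest = edges - {e}
    deleted = _chromatic(vertices, rest)  # P(G - e, k)
    # Contract e: relabel v as u; duplicate edges merge because edges form a set.
    merged = frozenset(frozenset(u if x == v else x for x in f) for f in rest)
    contracted = _chromatic(vertices - {v}, merged)  # P(G / e, k), one vertex fewer
    result = list(deleted)
    for i, c in enumerate(contracted):
        result[i] -= c
    return tuple(result)


def chromatic_coefficients(n, edge_list):
    """Simple graph on vertices 0..n-1 given as a list of (u, v) pairs."""
    return _chromatic(frozenset(range(n)), frozenset(frozenset(e) for e in edge_list))


def is_unimodal(seq):
    """True if seq rises (weakly) and then falls (weakly)."""
    i = 0
    while i + 1 < len(seq) and seq[i] <= seq[i + 1]:
        i += 1
    while i + 1 < len(seq) and seq[i] >= seq[i + 1]:
        i += 1
    return i == len(seq) - 1


def is_log_concave(seq):
    """True if a_i^2 >= a_{i-1} * a_{i+1} for every interior index i."""
    return all(seq[i] ** 2 >= seq[i - 1] * seq[i + 1] for i in range(1, len(seq) - 1))


c4 = chromatic_coefficients(4, [(0, 1), (1, 2), (2, 3), (3, 0)])
print(c4)  # (0, -3, 6, -4, 1), i.e. k^4 - 4k^3 + 6k^2 - 3k
mags = [abs(a) for a in c4 if a != 0]
print(mags, is_unimodal(mags), is_log_concave(mags))  # [3, 6, 4, 1] True True
```

Of course, checking small examples proves nothing in general; Huh's proof relied on tools from algebraic geometry rather than computation.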
